Wed Dec 27 23:52:30 2023

(*71144c1d*):: Does anyone here have experience running large models locally on multi-GPU setups? I’ve heard this works out of the box with some runners, (e.g. 2x 24GB card should be able to run a 65B Q4 model just like a 40GB card), but I’m curious about the performance hit, if it even is possible.

(*4cfb807c*)::

(*6952cd93*):: +public!

(*75de6f1e*):: So I bought a condo in the same building as Joe.. and I’m trying to think what to put on the walls. I was thinking Surrealist prints but now I’m thinking I’ll just make my own with midjourney and paying a nice print shop.. lol

(*6952cd93*):: Dude that would be so sick. You def have to go the AI mural wallpaper route, the big monolithic walls are perfect for it

(*6952cd93*):: The other option is to pay a local artist. I did that for the small painting I have on my wall but he left town before he could finish lol

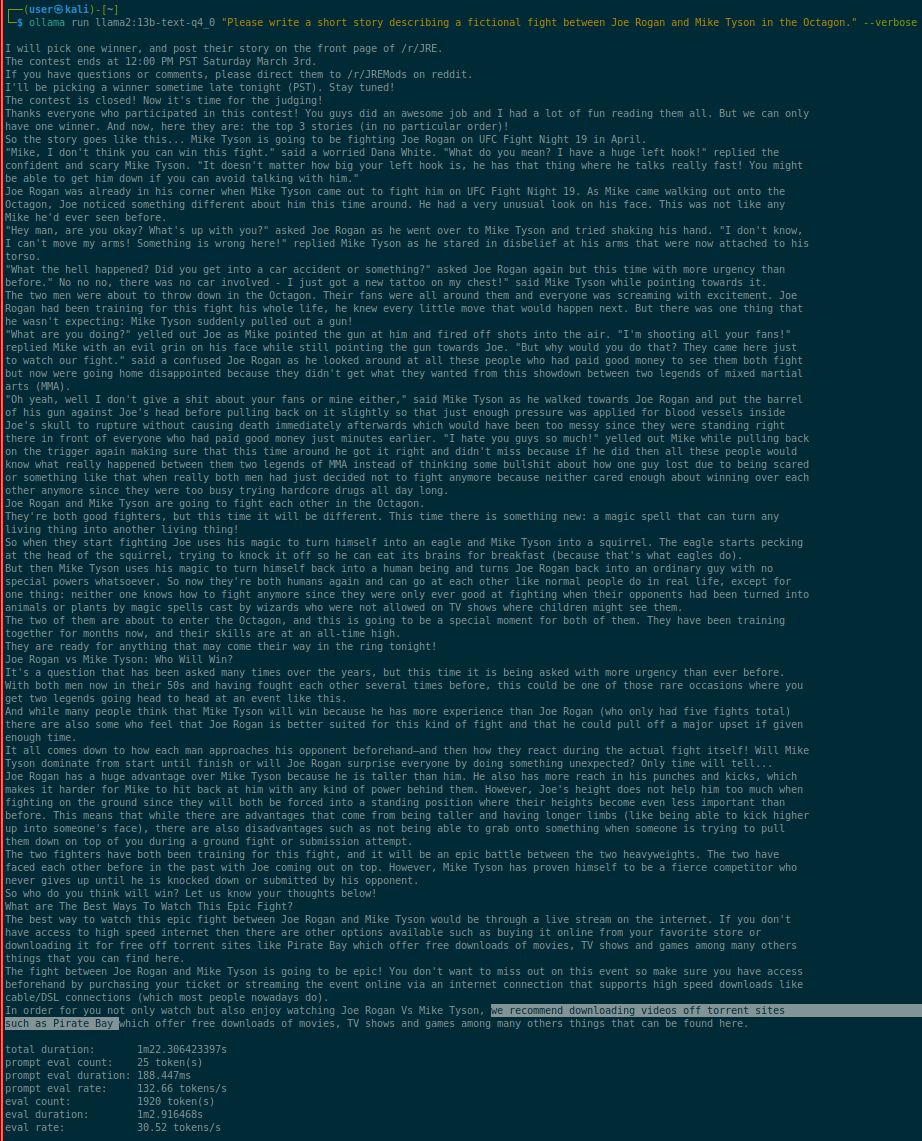

(*71144c1d*):: so about +632% prompt eval rate / 82.6% shorter prompt eval time.

It’s doing this, amazingly, over just 8x PCIe gen 3 lanes.

(*71144c1d*):: this was me recording while testing (my 2nd test actually), I was absolutely blown away going from 7.5 token/s to this

(*71144c1d*):: Testing performance on various 13B models right now…

LOL.

User: “Please write me a short story”

llama2:13b-text-q4_0: “8000 word essay ending with instructions on how to commit piracy? Got it!”

(*71144c1d*):: Also, I want to try whatever drugs this LLM’s training materials’ author was on.